Migrating ECS Fargate Cluster from x86-64 to arm-64 AWS Graviton Architecture with Fallback Strategy

Abstract

In recent years, the technology landscape has seen a significant shift towards sustainable and energy-efficient practices. This shift is not limited to end-user devices, such as Apple's Mac computers with the arm64-based M1 CPU; it extends to cloud infrastructure as well. This blog post walks through migrating an AWS ECS (Elastic Container Service) Fargate cluster from the x86-64 to the arm64 architecture, unlocking benefits such as energy efficiency, improved performance, and cost reduction.

Target Architecture

ARM started in portable and IoT devices and moved into high-end laptops (2020)

While both ARM and x86 CPU designs can achieve high performance, ARM designs typically prioritize smaller form factors, longer battery life, little or no active cooling, and, importantly, cost-effectiveness. This emphasis on cost efficiency is a key reason why ARM processors are prevalent in small electronic devices and mobile gadgets, including smartphones, tablets, and even Raspberry Pi systems. x86 architectures, on the other hand, are more commonly found in servers, PCs, and laptops, where speed and real-time flexibility are preferred and there are fewer constraints on cooling and size.

Recently, however, laptop vendors have started switching from the traditional x86-64 to the arm64 architecture. The most impressive example was Apple's announcement on November 10, 2020 that its new line of ARM-based computers (MacBook Air, Mac mini, MacBook Pro) would use Apple's new M1 chip:

“Dramatically increased performance speed, battery life, and access to the fastest, most powerful apps in the world.”

ARM enters Cloud Infrastructure as AWS instance types (2021)

Amazon Web Services has launched EC2 instances powered by Arm-based AWS Graviton Processors (custom-built by AWS using 64-bit Arm Neoverse cores). AWS Graviton2 processors, based on the Arm architecture, promise:

- up to 40% better price-performance than comparable x86-based instances
- about 20% lower cost
- up to 60% less energy use than comparable EC2 instances

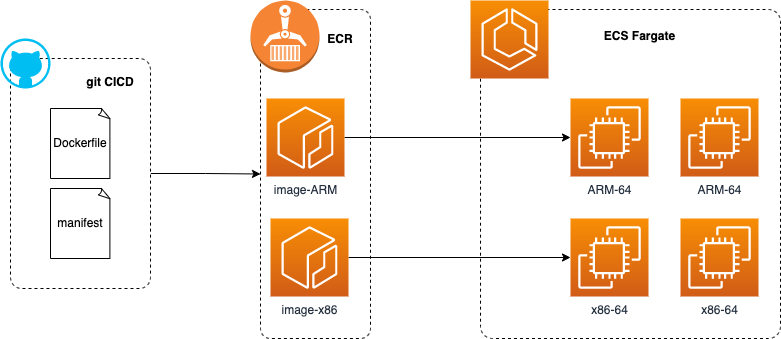

Steps to adapt a workload to run on ARM-based AWS ECS Fargate

1. Rebuild application image to be ARM-compatible with docker buildx

In 2019 Docker announced a partnership with Arm; since then, the Docker CLI has been extended with one more tool: buildx.

```
docker buildx ls
NAME/NODE DRIVER/ENDPOINT STATUS BUILDKIT PLATFORMS
default * docker
  default default running 20.10.21 linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6
```

It allows building images for different platforms from the same Dockerfile. First, let's create a dedicated buildx builder instance for arm64 and amd64:

```
docker buildx create --use --platform=linux/arm64,linux/amd64 --name multi-platform-builder
docker buildx inspect --bootstrap
multi-platform-builder
[+] Building 15.3s (1/1) FINISHED
 => [internal] booting buildkit 15.2s
 => => pulling image moby/buildkit:buildx-stable-1 12.0s
 => => creating container buildx_buildkit_multi-platform-builder0 3.2s
Name: multi-platform-builder
Driver: docker-container
Nodes:
Name: multi-platform-builder0
Endpoint: unix:///var/run/docker.sock
Status: running
Buildkit: v0.12.4
Platforms: linux/arm64*, linux/amd64*, linux/amd64/v2, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6
```
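Before building, it can be useful to confirm that the builder really lists every target platform. The following is only a sketch: it parses a `Platforms:` line in the form printed by `docker buildx inspect` above, and the helper names `parse_platforms` and `missing_platforms` are hypothetical, not part of Docker.

```python
from __future__ import annotations


def parse_platforms(line: str) -> set[str]:
    """Parse a 'Platforms: a, b*, c' line into a set of platform strings."""
    _, _, value = line.partition(":")
    # A trailing '*' (as on linux/arm64* above) is stripped so that names
    # compare cleanly; it appears on the platforms passed via --platform.
    return {p.strip().rstrip("*") for p in value.split(",") if p.strip()}


def missing_platforms(line: str, required: list[str]) -> list[str]:
    """Return the required platforms that the builder does not list."""
    available = parse_platforms(line)
    return [p for p in required if p not in available]


# The Platforms line from the inspect output above.
inspect_line = (
    "Platforms: linux/arm64*, linux/amd64*, linux/amd64/v2, linux/riscv64, "
    "linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, "
    "linux/arm/v7, linux/arm/v6"
)
print(missing_platforms(inspect_line, ["linux/amd64", "linux/arm64", "linux/arm/v7"]))
```

An empty list means every requested platform is available on the builder.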

Now we are ready to build the images from the Dockerfile, one per target platform (three in this example: amd64, arm64, and arm/v7), and publish all of them to the registry:

```
docker buildx build --platform linux/amd64,linux/arm64,linux/arm/v7 -t rtsypuk/mutiarch:latest --push .
[+] Building 38.7s (14/14) FINISHED
 => [internal] load build definition from Dockerfile 0.0s
 => => transferring dockerfile: 68B 0.0s
 => [linux/arm/v7 internal] load metadata for docker.io/library/ubuntu:latest 4.3s
 => [linux/amd64 internal] load metadata for docker.io/library/ubuntu:latest 4.5s
 => [linux/arm64 internal] load metadata for docker.io/library/ubuntu:latest 4.5s
 => [auth] library/ubuntu:pull token for registry-1.docker.io 0.0s
 => [internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [linux/amd64 1/2] FROM docker.io/library/ubuntu:latest@sha256:e6173d4dc55e76b87c4af8db8821b1feae4146dd47341e4d431118c7dd060a74 0.0s
 => => resolve docker.io/library/ubuntu:latest@sha256:e6173d4dc55e76b87c4af8db8821b1feae4146dd47341e4d431118c7dd060a74 0.0s
 => [linux/arm/v7 1/2] FROM docker.io/library/ubuntu:latest@sha256:e6173d4dc55e76b87c4af8db8821b1feae4146dd47341e4d431118c7dd060a74 0.0s
 => => resolve docker.io/library/ubuntu:latest@sha256:e6173d4dc55e76b87c4af8db8821b1feae4146dd47341e4d431118c7dd060a74 0.0s
 => [linux/arm64 1/2] FROM docker.io/library/ubuntu:latest@sha256:e6173d4dc55e76b87c4af8db8821b1feae4146dd47341e4d431118c7dd060a74 0.0s
 => => resolve docker.io/library/ubuntu:latest@sha256:e6173d4dc55e76b87c4af8db8821b1feae4146dd47341e4d431118c7dd060a74 0.0s
 => CACHED [linux/amd64 2/2] RUN apt-get update 0.0s
 => CACHED [linux/arm64 2/2] RUN apt-get update 0.0s
 => CACHED [linux/arm/v7 2/2] RUN apt-get update 0.0s
 => exporting to image 34.1s
 => => exporting layers 0.0s
 => => exporting manifest sha256:6a22b3fbf657aeafc4b23af8a093a733a5e630b00eaaf41fdddcd3bdb1ed416a 0.0s
 => => exporting config sha256:96b8e0a7ea4db49400aac3b4b7ee7195c749080b46bdd7cb7272c966acfaac6a 0.0s
 => => exporting manifest sha256:faad85867df328db47f97d60f3aa650c954d3d1f950a818d88c625389397b7ba 0.0s
 => => exporting config sha256:3809135392372a2fdba774b7f3b862b07bfee10b150f0f9167e4a074d327bd5e 0.0s
 => => exporting manifest sha256:987173987f663f5ab31c1403155c23df039b331b6390b51a0c56df41a93fd575 0.0s
 => => exporting config sha256:f645992b6019a56f2770cb045576974a46599486df5883a51ec80a5db36857cf 0.0s
 => => exporting manifest list sha256:46ed5729ad9766004f06af5794d035e9b7b6f4babd0989aed7e924be50510f50 0.0s
 => => pushing layers 31.5s
 => => pushing manifest for docker.io/rtsypuk/mutiarch:latest@sha256:46ed5729ad9766004f06af5794d035e9b7b6f4babd0989aed7e924be50510f50 2.6s
 => [auth] rtsypuk/mutiarch:pull,push token for registry-1.docker.io
```

When inspecting the pushed image, we can see that the tag points to a manifest list that references each platform-specific image by its SHA256 digest together with the platform it is built for:

```
docker buildx imagetools inspect docker.io/rtsypuk/mutiarch:latest
Name: docker.io/rtsypuk/mutiarch:latest
MediaType: application/vnd.docker.distribution.manifest.list.v2+json
Digest: sha256:46ed5729ad9766004f06af5794d035e9b7b6f4babd0989aed7e924be50510f50

Manifests:
  Name: docker.io/rtsypuk/mutiarch:latest@sha256:6a22b3fbf657aeafc4b23af8a093a733a5e630b00eaaf41fdddcd3bdb1ed416a
  MediaType: application/vnd.docker.distribution.manifest.v2+json
  Platform: linux/amd64

  Name: docker.io/rtsypuk/mutiarch:latest@sha256:faad85867df328db47f97d60f3aa650c954d3d1f950a818d88c625389397b7ba
  MediaType: application/vnd.docker.distribution.manifest.v2+json
  Platform: linux/arm64

  Name: docker.io/rtsypuk/mutiarch:latest@sha256:987173987f663f5ab31c1403155c23df039b331b6390b51a0c56df41a93fd575
  MediaType: application/vnd.docker.distribution.manifest.v2+json
  Platform: linux/arm/v7
```

Now let's pull the image on a Raspberry Pi (which runs on ARM). Docker automatically detects the architecture and pulls the ARM image. The container is up and running.

```
docker image inspect rtsypuk/mutiarch:latest
[
    {
        "Id": "sha256:f645992b6019a56f2770cb045576974a46599486df5883a51ec80a5db36857cf",
        "RepoTags": [
            "rtsypuk/mutiarch:latest"
        ],
        "Architecture": "arm",
        "Os": "linux",
        "Size": 92377411,
```

If we start the same container on an x86 host, Docker pulls the x86-compatible image.

```
docker image inspect rtsypuk/mutiarch:latest
[
    {
        "Id": "sha256:96b8e0a7ea4db49400aac3b4b7ee7195c749080b46bdd7cb7272c966acfaac6a",
        "RepoTags": [
            "rtsypuk/mutiarch:latest"
        ],
        "Architecture": "amd64",
        "Os": "linux",
        "Size": 125293711,
```

This is the beauty of multi-arch images: based on the current runtime and the manifest declaration, Docker pulls the appropriate image, so the same application can run on different hardware.
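The selection step can be pictured with a small sketch. It is a simplified illustration, not Docker's actual resolution logic: the entries mirror the `imagetools inspect` output above (digests shortened to their first 12 hex characters), and `select_manifest` is a hypothetical helper.

```python
from __future__ import annotations

# Entries mirroring the manifest list shown above; digests are shortened.
MANIFEST_LIST = [
    {"digest": "sha256:6a22b3fbf657", "os": "linux", "architecture": "amd64", "variant": None},
    {"digest": "sha256:faad85867df3", "os": "linux", "architecture": "arm64", "variant": None},
    {"digest": "sha256:987173987f66", "os": "linux", "architecture": "arm", "variant": "v7"},
]


def select_manifest(entries, os_name, architecture, variant=None):
    """Return the digest of the first entry matching the host platform, or None."""
    for entry in entries:
        if entry["os"] != os_name or entry["architecture"] != architecture:
            continue
        # Only compare variants when the entry declares one (e.g. arm/v7).
        if entry["variant"] is not None and entry["variant"] != variant:
            continue
        return entry["digest"]
    return None


print(select_manifest(MANIFEST_LIST, "linux", "arm64"))      # arm64 host, e.g. Graviton
print(select_manifest(MANIFEST_LIST, "linux", "amd64"))      # x86-64 host
print(select_manifest(MANIFEST_LIST, "linux", "arm", "v7"))  # 32-bit ARM host
```

A host whose platform is not in the list gets `None` here, which corresponds to a pull that fails because no matching manifest exists.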

2. Other options of Multi-arch builds for both x86 and arm platforms (kaniko)

The previous option requires running a buildx builder container, so the build effectively runs Docker inside Docker. If we check the running containers, one of them is based on the image moby/buildkit:buildx-stable-1:

```
docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
6168f4bb8935 moby/buildkit:buildx-stable-1 "buildkitd" 11 hours ago Up 11 hours buildx_buildkit_multi-platform-builder0
```

If your environment is restricted and you cannot run docker-in-docker builds, there are other options. One of them is kaniko.

Here is an example .gitlab-ci.yml that uses kaniko for multi-arch builds:

```yaml
# define a job for building the containers
build-container:
  stage: container-build
  # run parallel builds for the desired architectures
  parallel:
    matrix:
      - ARCH: amd64
      - ARCH: arm64
  tags:
    # run each build on a suitable, preconfigured runner (must match the target architecture)
    - runner-${ARCH}
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  script:
    # build the container image for the current arch using kaniko and
    # push it to the GitLab container registry, adding the current arch as tag
    # (comments must stay outside the folded scalar, otherwise they become part of the command)
    - >-
      /kaniko/executor
      --context "${CI_PROJECT_DIR}"
      --dockerfile "${CI_PROJECT_DIR}/Dockerfile"
      --destination "${CI_REGISTRY_IMAGE}:${ARCH}"
```
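The matrix job relies on each runner matching its target architecture. As a rough sketch of a pre-flight check (not part of kaniko or GitLab), a script could compare the host's machine type with the expected `ARCH` value. The mapping table and the names `docker_arch` and `runner_matches` are assumptions for illustration and cover only the common cases.

```python
from __future__ import annotations

import platform

# Common kernel machine names mapped to the architecture names used in the
# ARCH matrix. This table is an assumption covering only typical values.
MACHINE_TO_ARCH = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def docker_arch(machine: str) -> str:
    """Translate a machine name such as 'aarch64' into 'arm64'."""
    try:
        return MACHINE_TO_ARCH[machine.lower()]
    except KeyError:
        raise ValueError(f"unsupported machine type: {machine}") from None


def runner_matches(target_arch: str, machine: str | None = None) -> bool:
    """Check whether this host can natively build images for target_arch."""
    return docker_arch(machine or platform.machine()) == target_arch


print(runner_matches("arm64", "aarch64"))  # True
```

Called without the second argument, `runner_matches` inspects the machine it runs on via `platform.machine()`.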

3. Push both images and manifests to ECR Registry

If your runner does not support ARM builds, you can run parallel builds on an x86-64 runner and an arm64 runner. After the images are built, they should be pushed to the registry with the architecture as a suffix in the tag; manifest-tool can then combine them under a single tag:

```yaml
# manifest-tool
- ./manifest-tool --username "${USER}" --password "${PASSWORD}" push from-args
  --platforms linux/amd64,linux/arm64
  --template ${REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}-ARCH
  --tags ${IMAGE_TAG}
  --target ${REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}
```

manifest-tool assembles the manifest list and pushes it to the registry, just as docker buildx does. At that point the registry contains both images as well as the manifest list that specifies which image to use on which platform.
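To make the role of the `--template` argument more concrete, here is a minimal sketch of how the `ARCH` placeholder expands into one image reference per platform. It only imitates the idea and is not manifest-tool's code; `expand_template` and the registry name `registry.example.com/app` are hypothetical, and only the `ARCH` placeholder is handled.

```python
from __future__ import annotations


def expand_template(template: str, platforms: str) -> list[str]:
    """Replace the ARCH placeholder with each platform's architecture."""
    refs = []
    for plat in platforms.split(","):
        # A platform looks like 'linux/arm64' or 'linux/arm/v7'.
        _os_name, arch = plat.strip().split("/")[:2]
        refs.append(template.replace("ARCH", arch))
    return refs


for ref in expand_template("registry.example.com/app:1.0-ARCH", "linux/amd64,linux/arm64"):
    print(ref)
```

Each printed reference must already exist in the registry, which is why the per-architecture images are pushed first.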

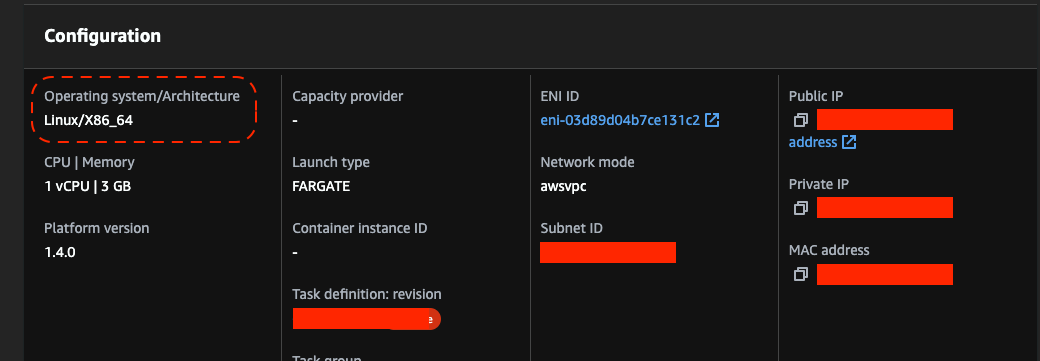

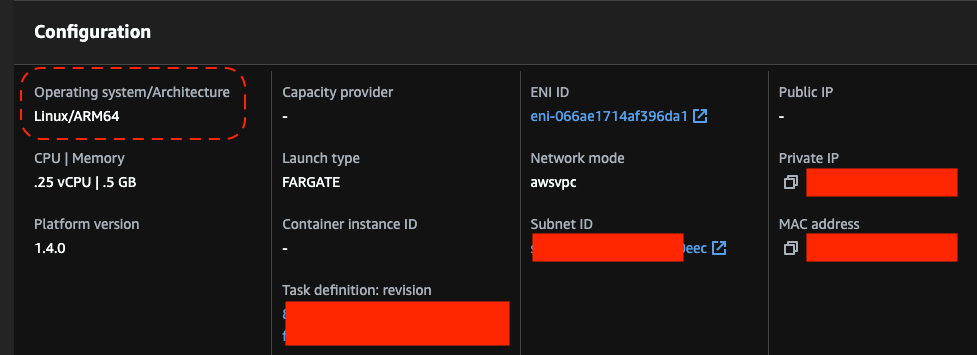

4. Change the Task definition

To use the ARM architecture in ECS, the task definition must be updated: we need to specify ARM64 for the cpuArchitecture task definition parameter. The same applies to any IaC tool, such as Terraform or CloudFormation.

Task before the migration:

The runtimePlatform section of the task definition that should be updated:

```json
{
    "runtimePlatform": {
        "operatingSystemFamily": "LINUX",
        "cpuArchitecture": "ARM64"
    }
}
```

Final definition:

```json
{
    "family": "arm64-testapp",
    "networkMode": "awsvpc",
    "containerDefinitions": [
        {
            "name": "arm-container",
            "image": "arm64v8/busybox",
            "cpu": 100,
            "memory": 100,
            "essential": true,
            "command": [ "echo hello world" ],
            "entryPoint": [ "sh", "-c" ]
        }
    ],
    "requiresCompatibilities": [ "FARGATE" ],
    "cpu": "256",
    "memory": "512",
    "runtimePlatform": {
        "operatingSystemFamily": "LINUX",
        "cpuArchitecture": "ARM64"
    },
    "executionRoleArn": "arn:aws:iam::123456789012:role/ecsTaskExecutionRole"
}
```
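When many task definitions need the same change, the edit can be scripted. The sketch below only shows the transformation on a plain dictionary; the helper `to_arm64` and the sample `x86_task` are hypothetical, and registering the result with ECS (through the CLI, an SDK, or IaC) is deliberately left out.

```python
import copy
import json


def to_arm64(task_definition: dict) -> dict:
    """Return a copy of the task definition that targets ARM64 on Linux."""
    updated = copy.deepcopy(task_definition)
    updated["runtimePlatform"] = {
        "operatingSystemFamily": "LINUX",
        "cpuArchitecture": "ARM64",
    }
    return updated


# Hypothetical x86-64 task definition, trimmed to the fields relevant here.
x86_task = {
    "family": "testapp",
    "requiresCompatibilities": ["FARGATE"],
    "cpu": "256",
    "memory": "512",
    "runtimePlatform": {"operatingSystemFamily": "LINUX", "cpuArchitecture": "X86_64"},
}

print(json.dumps(to_arm64(x86_task)["runtimePlatform"]))
```

Because the function works on a deep copy, the original x86-64 definition stays available, which is convenient if a rollback is needed.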

Finally, the ECS Fargate task is running on an ARM-based Graviton instance.

Comparison of billing for the same CPU/memory instances of different architecture

Now let's do the math to see how much we save on infrastructure costs for a hypothetical container with 1 vCPU and 1 GB of RAM.

Billing 1 vCPU

| Platform | per vCPU per hour | per vCPU per day | per vCPU per month (31 days) | per vCPU per year (12 × 31-day months) |
|---|---|---|---|---|
| Linux/X86_64 | $0.04048 | $0.97152 | $30.11712 | $361.40544 |
| Linux/ARM_64 | $0.03238 | $0.77712 | $24.09072 | $289.08864 |

Billing Memory 1 GB

| Platform | per GB per hour | per GB per day | per GB per month (31 days) | per GB per year (12 × 31-day months) |
|---|---|---|---|---|
| Linux/X86_64 | $0.004445 | $0.10668 | $3.30708 | $39.68496 |
| Linux/ARM_64 | $0.00356 | $0.08544 | $2.64864 | $31.78368 |

As we know, the aggregated ECS task price consists of three components: vCPU, memory, and ephemeral storage. The first 20 GB of ephemeral storage is free, so most workloads are probably billed only for the vCPU and memory attached to their tasks.

Migrating from x86-64 to arm64 while keeping the same CPU and memory resources reduces the bill by about 20%, which is a significant saving.
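The same arithmetic can be reproduced for any task size. The sketch below uses the hourly rates from the tables above and a 31-day month, matching the vCPU table; the function name `monthly_cost` is just an illustration, and ephemeral storage is ignored on the assumption that it stays within the free 20 GB.

```python
# Hourly Fargate rates taken from the tables above (USD).
RATES = {
    "X86_64": {"vcpu": 0.04048, "gb": 0.004445},
    "ARM64": {"vcpu": 0.03238, "gb": 0.00356},
}
HOURS_PER_MONTH = 24 * 31  # 31-day month, as in the vCPU table


def monthly_cost(arch: str, vcpu: float, memory_gb: float) -> float:
    """Monthly cost of one always-on task with the given vCPU and memory."""
    rate = RATES[arch]
    return (vcpu * rate["vcpu"] + memory_gb * rate["gb"]) * HOURS_PER_MONTH


x86 = monthly_cost("X86_64", 1, 1)
arm = monthly_cost("ARM64", 1, 1)
saving_percent = 100 * (1 - arm / x86)
print(f"x86-64: ${x86:.2f}/month, arm64: ${arm:.2f}/month, saving {saving_percent:.1f}%")
```

Since both the vCPU and the memory rate are roughly 20% lower on ARM, the saving stays close to 20% regardless of the vCPU-to-memory ratio of the task.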

Limitations and a Fallback

If a specific workload is tied to the x86 architecture (for example, it depends on a third-party library that has no ARM build), keeping both images in the registry is a workable solution: depending on the runtime platform, the image for the appropriate architecture is used.
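One way to express this fallback in deployment tooling is to pick the `cpuArchitecture` value per workload from the platforms its image actually provides. This is a sketch under assumptions: `choose_cpu_architecture` is a hypothetical helper, and the platform list would come from something like the manifest inspection shown earlier.

```python
from __future__ import annotations

# ECS cpuArchitecture values mapped to the image platform they require.
PLATFORM_FOR = {"ARM64": "linux/arm64", "X86_64": "linux/amd64"}


def choose_cpu_architecture(image_platforms: list[str], prefer: str = "ARM64") -> str:
    """Prefer one architecture and fall back to the other if the image lacks it."""
    fallback = "X86_64" if prefer == "ARM64" else "ARM64"
    for arch in (prefer, fallback):
        if PLATFORM_FOR[arch] in image_platforms:
            return arch
    raise ValueError("image supports neither linux/arm64 nor linux/amd64")


print(choose_cpu_architecture(["linux/amd64", "linux/arm64"]))  # multi-arch image
print(choose_cpu_architecture(["linux/amd64"]))                 # x86-only workload
```

With this approach, x86-only workloads keep running unchanged while everything that already ships an arm64 image moves to Graviton.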